Following my last article covering the Women 4 Artificial Intelligence Conference, I wanted to go into more detail about how gender bias in tech and AI actually manifests in real-world situations. The conference was useful for understanding how the RAM tool (Readiness Assessment Methodologies) helps you assess the current state of a country and its level of preparation to sustain legal, political, and social change that favors ethical AI usage. But what is it that we actually need to prepare for?

When I first learned about gender bias in tech

The first time I learned about gender bias in machine learning processes was about 7 years ago. I was talking with my Bangladeshi friend, Imran, about the differences between our languages, when he told me a “fun fact”: unlike Romanian, which is a Latin-based language, Bengali does not have grammatical gender. The fact in itself did not surprise me, as I knew that many other languages simply do not use gender to structure and differentiate their syntax. However, he then showed me a quick exercise using Google Translate. He sent me a screenshot of a Bengali text made up of everyday phrases such as “They are an architect. They like to cook. They take care of the children. They are the boss. They are powerful.”, translated into English. To my surprise, even though the original phrases did not attach any gender to these attributes and actions, in English they became “He is an architect. She likes to cook. She takes care of the children. He is the boss. He is powerful.”

I remember being quite upset at this finding – How come Google translates it this way? Why would it do such a thing? It’s technology, it should be neutral. I was just young, and still so sensitive to such issues, without having a deep understanding of how these technologies work. Now I understand better, but it still does not make it acceptable.

Earlier studies about gender and tech

Many studies look at the relationship between humans and technology. One of the first was Orlikowski’s work in 1992, where she introduced the concept of the “duality of technology”, in which technology is “physically constructed by actors working in a given social context, and actors socially construct technology through the different meanings they attach to it and the various features they emphasize and use” (p. 406).

Similarly to Orlikowski, Fountain positions technology as intersecting with human agency, but she places this in an institutional and organizational context: “the effects of the Internet on government will be played out in unexpected ways, profoundly influenced by organizational, political and institutional logics” (p. 12). Her framework highlights how institutions both influence and are influenced by ICTs and predominant organizational forms (p. 89).

She distinguishes between two forms of technology: objective (the hardware, Internet, software, etc.) and enacted (“the perception of users as well as designs and uses in particular settings”, p. 10). The author concludes that the outcomes of technology enactment are multiple and unpredictable, as they result from a complex interflow of logic, relations, and institutions. AI is an “objective technology”, but once it is put to use, it influences and is influenced by human agency and different forms of organization and arrangements, which leads to unexpected consequences. As long as gender biases are implicit in our society and culture, they will naturally become the contextual factors that make AI reproduce the same biases.

Gender bias in language

Bias often shows up through language. The AI service “Genderify”, which was launched in 2020, uses a person’s name, username, and email address to infer the gender of that person (Vincent, 2020). Users of the service quickly noticed that names beginning with “Dr” were consistently flagged as male: for example, the name “Dr Meghan Smith” was assigned a 75% likelihood of belonging to a man. Hundt et al. (2022) found that robots trained on large datasets and standardized models strongly reinforced stereotypical and biased behavior with regard to gender and race.

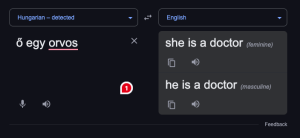

In 2018, researchers at the Federal University of Rio Grande do Sul in Brazil conducted a study to test for gender bias in AI, specifically in machine translation (Prates et al. 2020). Much like the Google Translate test my friend Imran showed me, they translated sentence constructions from twelve gender-neutral languages (Malay, Hungarian, Swahili, Bengali, Estonian, Finnish, Japanese, Turkish, Yoruba, Basque, Armenian, and Chinese) into English. They found that the machine translation showed a strong bias towards male pronouns, especially for fields such as STEM (which is already viewed as more male-oriented than female-oriented). At the time of the study, women held 39.8% of “management” jobs, but sentences related to management positions were translated with a female pronoun only 11% of the time, so the translations did not even reflect real-world statistics. When they ran the same test with adjectives, they found that words such as “shy”, “attractive”, “happy”, “kind” and “ashamed” tended to be associated with female pronouns, while “cruel”, “arrogant” and “guilty” were associated with male pronouns.
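To make the comparison above more concrete, here is a minimal sketch of how such a pronoun count could work. It is not the method used by Prates et al.: the sample sentences are hypothetical, the `pronoun_shares` helper is my own illustration, and it only looks at the first word of each translated sentence. The only real figures are the 39.8% and 11% quoted above.

```python
from collections import Counter

# Hypothetical English outputs for source sentences with a gender-neutral pronoun.
translations = [
    "He is a manager.",
    "He is an engineer.",
    "She is a nurse.",
    "He is a director.",
    "They are a supervisor.",
]

# Map the opening pronoun of a sentence to a gender label.
PRONOUN_GENDER = {"he": "male", "she": "female"}


def pronoun_shares(sentences):
    """Return the share of sentences that open with a male, female, or other pronoun."""
    counts = Counter()
    for sentence in sentences:
        words = sentence.split()
        first = words[0].lower() if words else ""
        counts[PRONOUN_GENDER.get(first, "other")] += 1
    total = sum(counts.values())
    if total == 0:
        return {"male": 0.0, "female": 0.0, "other": 0.0}
    return {label: counts[label] / total for label in ("male", "female", "other")}


shares = pronoun_shares(translations)

# Gap between the real-world share quoted in the text (39.8%)
# and the share of female pronouns in the translations (11%).
reported_gap = 0.398 - 0.11

print(shares)
print(f"Gap in percentage points: {reported_gap * 100:.1f}")
```

A real audit would need many more sentences per occupation and a more careful way of detecting the pronoun, but the idea is the same: count what the system outputs and compare it with a real-world baseline.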

Soon after their research was published, Google released a statement in which they admitted that their machine translation presents gender bias, and they later released a new feature that offers both feminine and masculine translations.

In 2021, Google also released the “Translated Wikipedia Biographies” dataset for measuring gender bias in machine translation, through which they state that they can reduce errors by 67% (Stella, 2021).

Gender bias in imagery

Gender bias in visual imagery is also nothing new, and it is something that has interested me for some time. “Bias in the visual representation of women and men has been endemic throughout the history of media, journalism, and advertising”, say Schwemmer et al. (2020). Gendered representation has been present in many forms and contexts of imagery: science education resources (Kerkhoven et al. 2016), commercial films (Jang et al. 2019), and Iranian school textbooks (Amini and Birjandi 2012).

The website Gender Shades was created after a pivotal study carried out by Joy Buolamwini of MIT and Timnit Gebru from Microsoft Research in 2018. They started their study by emphasizing the use of facial recognition tools in public administration and criminal justice systems. They raised the concern that these technologies are not neutral and can threaten individuals’ civil liberties (Buolamwini and Gebru 2018:2), with harms including “economic loss”, “loss of opportunity” in hiring, education, and housing, and “social stigmatisation” through reinforced stereotypes that, in the end, affect both the individual and the collective.

The study revealed intersectional errors in the software, which was less accurate in identifying women than men and less accurate for darker-skinned people than for lighter-skinned people, with darker-skinned women being the most likely to be misclassified.
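To illustrate what “intersectional” means here, the sketch below breaks accuracy down by gender and skin tone together instead of reporting a single overall number. The records are hypothetical and made up for illustration only; they are not data from the Gender Shades study, and `accuracy_by_subgroup` is my own helper name.

```python
from collections import defaultdict

# Hypothetical records: (gender label, skin-tone group, was the classifier correct?)
records = [
    ("female", "darker", False),
    ("female", "darker", True),
    ("female", "lighter", True),
    ("female", "lighter", True),
    ("male", "darker", True),
    ("male", "darker", True),
    ("male", "lighter", True),
    ("male", "lighter", True),
]


def accuracy_by_subgroup(rows):
    """Return accuracy for every (gender, skin tone) combination in the data."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for gender, skin, is_correct in rows:
        key = (gender, skin)
        totals[key] += 1
        correct[key] += int(is_correct)
    return {key: correct[key] / totals[key] for key in totals}


subgroup_accuracy = accuracy_by_subgroup(records)
overall_accuracy = sum(ok for _, _, ok in records) / len(records)
worst_group = min(subgroup_accuracy, key=subgroup_accuracy.get)

print(f"Overall accuracy: {overall_accuracy:.3f}")
for group, acc in sorted(subgroup_accuracy.items()):
    print(group, f"{acc:.2f}")
print("Worst-served group:", worst_group)
```

In this toy example the overall accuracy looks respectable, while one subgroup is served far worse than the rest. That is exactly the kind of gap a single headline number hides.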

To conclude

Finally, AI systems not only reflect all these biases, but they can also “get caught in negative feedback loops” (Busuioc, 2021), in which biased outputs become the basis for future predictions, especially if the initial data set was biased. Bias mitigation involves “proactively addressing factors which contribute to bias” (Lee et al. 2019), and is usually associated with the concept of “fairness”. AI bias can be reduced through mitigation techniques such as rebalancing data, regularisation, and adversarial learning, but a strong political and institutional structure is still needed to really support this change.
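As a small illustration of what “rebalancing data” can look like, the sketch below gives each training example a weight that is inversely proportional to the size of its group, so that every group carries the same total weight. This is only one simple form of rebalancing, shown under my own assumptions: the group labels are hypothetical and `balancing_weights` is an illustrative helper, not a method taken from the sources cited here.

```python
from collections import Counter

# Hypothetical group labels for a small, imbalanced training set.
groups = ["male", "male", "male", "female"]


def balancing_weights(labels):
    """Return one weight per example so that each group has equal total weight."""
    counts = Counter(labels)
    n_groups = len(counts)
    total = len(labels)
    # weight = total / (n_groups * group size): examples from smaller groups weigh more.
    return [total / (n_groups * counts[label]) for label in labels]


weights = balancing_weights(groups)

# Total weight carried by each group after rebalancing.
group_weight = Counter()
for label, weight in zip(groups, weights):
    group_weight[label] += weight

print(weights)
print(dict(group_weight))
```

Weights like these can typically be passed to a training procedure that accepts per-example weights. Reweighting does not fix biased labels or missing groups, which is part of why the institutional support mentioned above still matters.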

References

Amini M, Birjandi P (2012) Gender bias in the Iranian High School EFL Textbooks. Engl Lang Teach 5(2):134–147

Buolamwini J, Gebru T (2018) Gender shades: Intersectional accuracy disparities in commercial gender classification. In: Conference on Fairness, Accountability and Transparency. PMLR, pp 77–91

Busuioc M (2021) Accountable artificial intelligence: Holding algorithms to account. Public Adm Rev 81(5):825–836

Fountain JE (2004) Building the virtual state: Information technology and institutional change. Brookings Institution Press

Hundt A, Agnew W, Zeng V, Kacianka S, Gombolay M (2022, June). Robots Enact Malignant Stereotypes. In 2022 ACM Conference on Fairness, Accountability, and Transparency (pp. 743–756)

Kerkhoven AH, Russo P, Land-Zandstra AM, Saxena A, Rodenburg FJ (2016) Gender stereotypes in science education resources: A visual content analysis. PLoS ONE 11(11):e0165037. https://doi.org/10.1371/journal.pone.0165037

Lee NT, Resnick P, Barton G (2019) Algorithmic bias detection and mitigation: Best practices and policies to reduce consumer harms. Brookings Institution, Washington, DC, USA. https://www.brookings.edu/research/algorithmic-bias-detection-and-mitigation-best-practices-and-policies-to-reduce-consumer-harms/.

MIT Media Lab-b (2018) Gender Shades Project: Why This Matters. https://www.media.mit.edu/projects/gender-shades/why-this-matters/.

Orlikowski WJ (1992) The duality of technology: Rethinking the concept of technology in organizations. Organ Sci 3(3):398–427

Prates MO, Avelar PH, Lamb LC (2020) Assessing gender bias in machine translation: A case study with Google Translate. Neural Comput Appl 32:6363–6381

Schwemmer C, Knight C, Bello-Pardo ED, Oklobdzija S, Schoonvelde M, Lockhart JW (2020) Diagnosing gender bias in image recognition systems. Socius 6:1–17

Stella R (2021) A Dataset for Studying Gender Bias in Translation. Google AI Blog. https://ai.googleblog.com/2021/06/a-dataset-for-studying-gender-bias-in.html.

Vincent J (2020) Service that uses AI to identify gender based on names looks incredibly biased / Meghan Smith is a woman, but Dr. Meghan Smith is a man, says Genderify. The Verge. https://www.theverge.com/2020/7/29/21346310/ai-service-gender-verification-identification-genderify.

Jang JY, Lee S, Lee B (2019) Quantification of gender representation bias in commercial films based on image analysis. Proceed ACM on Human-Comput Interact 3:1–29

Feature image: Alan Warburton, CC BY 4.0, via Wikimedia Commons