Hi again! As mentioned in my introduction post, I’m using this blog to explore my doubts about the rapid development of big data and its impact on social justice and equality. This time, I’m sharing some reflections after reading UN-Habitat and Mila’s (Quebec Artificial Intelligence Institute) recently released report on AI & cities, and I hope you will continue the discussion by sharing your own thoughts.

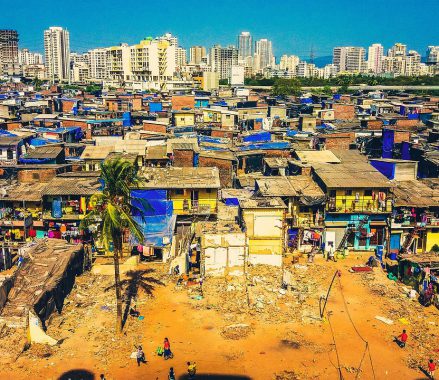

The report zooms in on cities, which from an ICT4D (Information and Communication Technology for Development) perspective is not such a bad idea, considering the growing inequalities within cities in the Global North, the expansion of slums in the Global South, and the fact that cities are among the main experimental sites for new uses of big data. It’s a fascinating read, full of insights into the latest advancements, and it presents the authors’ vision of a future where the use of big data through “responsible AI” not only upholds but also enhances the values set out in human rights frameworks and the SDGs (Sustainable Development Goals), through “vibrant AI-powered cities that are climate-conscious, socially just and designed for all”. However, being the skeptic I am, I can’t help but read a considerable dose of technological determinism (i.e., the belief that technology will “save us”) into these words.

Despite this optimism, I must admit the report is quick to raise critical concerns about potential risks as well, such as a widening digital divide, rights abuses, and the perpetuation of historically discriminatory practices through the use of biased data. One of its main messages is that if such ethical issues are left unaddressed, they can lead to widespread negative (though often unintended) consequences in the form of emotional, behavioral or physical harm to society, as in the case of predictive policing that disproportionately targets poor neighborhoods.

The role of private corporations

One of the key challenges I see in the report relates to accountability in public-private partnerships. Because of the skills (e.g., AI experts), infrastructure (e.g., storage on data servers) and amounts of data (e.g., from social media, telecom operators or online sharing platforms) required, these partnerships are in most cases inevitable, not least in the Global South. The fundamental issue is that private corporations are ultimately driven by their shareholders’ agendas, which are not always aligned with the interests of the general public. But there are also less obvious concerns. One of these is the issue of power bias, also discussed in a post by my fellow blogger Sara B: a small number of global frontier firms located in a few powerful countries serve the entire global economy. The general risk that an AI system fails to reflect the needs of all impacted communities, because of a lack of team diversity (gender, cultural, educational, etc.) and inclusivity (decision-making power for all relevant stakeholders), is higher in these firms, where design teams “tend to be small and composed of men of middle to high social status” (to cite the report). My own experience of working closely with similar teams as a user experience researcher, and of struggling to make the process more participatory (while mostly only managing to address very shallow levels of system usability rather than usefulness from a wider societal perspective), tells me there’s a big risk that innovation is driven by the life worlds of people in the most privileged parts of the world. This, combined with the digital divide effect of less data being gathered in the Global South, implies a considerable risk that these countries will be negatively impacted by faulty AI systems that are not optimized for the local context and that ultimately perpetuate existing inequalities.

Considering this, and being the social anthropologist I am, I can’t help but notice how these set-ups seem to lack some of the most important aspects of qualitative research methods. I’m thinking foremost of the importance of contextual factors in data interpretation, a reflexive approach that explicitly analyzes the assumptions made by those building the AI system, and a more critical approach that considers the effects on social justice and equality.

According to the report, private corporations are increasingly encouraged to commit to “responsible AI”, as defined in the Montreal Declaration for Responsible AI and the UNESCO Recommendation on the Ethics of Artificial Intelligence. One example is the Partnership on AI (whose members include Google, Apple, IBM, Meta and Microsoft), with its vision of a “future where Artificial Intelligence empowers humanity by contributing to a more just, equitable, and prosperous world”; another is events such as the very recent Microsoft Research Summit 2022, where the aim was to “spark conversations around how to ensure new technologies could have the broadest possible benefit for humanity.” Having worked in a similar company, I can’t help relating this to internal and external marketing and corporate social responsibility campaigns. But maybe I’m being too skeptical here. I mean, they are really trying, aren’t they? So why am I not convinced?

Can an ethical practice be ensured?

The report holds that with the evolution towards such a “responsible” private sector, together with a solid ethical framework for the use of big data and AI (such as the one proposed in the report), the future is looking bright. At the same time, I’m left with the feeling that what I just read is a somewhat desperate attempt to help governments and civil society stay afloat in their struggle to keep on top of a fast-paced development run by a few big private corporations (be they “responsible” or not).

Some of the key questions that need to be asked more often are: (1) why big data is being used, (2) how it’s gathered, used, stored and disposed of, (3) who is designing the AI systems, and (4) who is impacted by them.

Whereas the last three questions concern the degree of compliance with “responsible AI”, I find the first one to be perhaps the most crucial, but also the one receiving the least attention (at least in this report). Here I’m going to leave you with one last reflection related to my own experience of working as a social scientist in the world of digital innovation. That experience tells me that the initial question of why a certain technology is the best solution to the problem at hand is overshadowed by sheer excitement about the technological possibilities, which blinds people and leads them to innovate without sufficient reflection, focusing instead on things like the “wow factor” (a central concern when prototypes of new applications are being prepared). It takes a lot for someone, in the midst of this excitement, to raise their hand and question the use of the technology in terms of its wider effects on society, equality and social justice.

To conclude, at the risk of oversimplifying a whole lot, let’s say that the use of big data today is a battle between some private corporations looking for profit, some governments looking for power and control, and some other governments and civil society organizations looking to “do good”. If so, who is winning and who is losing? Who’s in the driver’s seat? And what would the definition of “doing good” be? I will be back with more reflections on these questions. Until then, I would love to hear your views.