Efficiency, automation, integration, innovation: information and communication technologies (ICT) promise all of these and more. More specifically, the field of data for development (D4D) “ascribe[s] power and agency to data as a development actor” (Cinnamon, 2020, p. 215).

How can data be a development actor? Cinnamon (2020) explains that in a development context, new data technologies are considered to have the potential to improve living standards and equality within and between nations. They can do this by “[enhancing] evidence-based decision-making, [strengthening] transparency and accountability, [and measuring] development progress” (p. 215). However, the academic discourse also acknowledges that data-driven technologies have the power to substantially increase inequality.

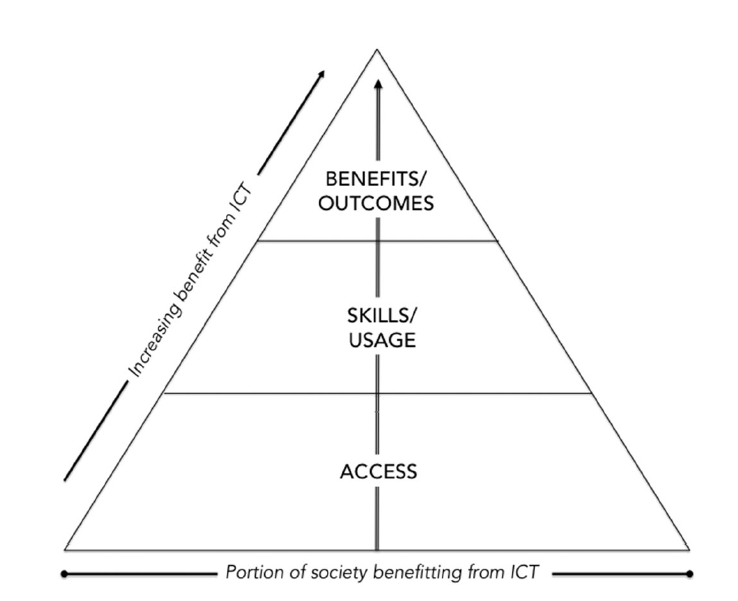

First, we must understand the pathways through which D4D can affect equality. Cinnamon (2020, p. 216) characterises these as three levels of the digital divide:

- First-level digital divide – whether or not a community has access to information and communication technologies (ICTs) (for example, to computer hardware or the internet)

- Second-level digital divide – whether or not a community has the skills and ability to make use of ICTs to enhance social or economic capital

- Third-level digital divide – whether or not a community has the ability to convert the use of ICTs into some tangible benefit (for example, improved educational opportunities or livelihood).

Source: Cinnamon (2020), p. 217

Focusing on this third level, Walsham (2017, p. 22) argues that there is a difference between “ICT in developing countries” and “ICT for development” (ICT4D). Too often, development projects focus on the first and second levels, with the assumption that the third level – obtaining a benefit – will follow naturally. But the reality is much more complex.

So what should development workers look out for when it comes to understanding the potential impacts of data for development – for better or worse?

Four questions to ask about data for development

It’s easy to get swept away by the promise of new technologies, but we must always keep a critical eye. That’s what I mean when I suggest that being a techno-pessimist can be constructive. I’ve summarised four key things to think about when assessing the potential of data for development to drive positive social change.

1. Does it actually improve people’s lives?

According to Walsham (2017) and Sein et al. (2019), we still don’t have clear answers about the extent to which ICTs contribute to development, especially amongst the poorest people. This is partly an issue of scope and definition: the fields of D4D and ICT4D are broad and loosely defined. Existing definitions are not clear about the outcomes that D4D or ICT4D initiatives are supposed to deliver for the people they are intended to support (Sein et al., 2019; Heeks, 2017). For example, a widely accepted definition of ICT4D comes from Richard Heeks:

“The application of any entity that processes or communicates digital data in order to deliver some part of the international development agenda in a developing country” (2017, p. 10)

This definition requires an understanding of what is meant by ‘development’, which is a rabbit hole I will not go down for the purposes of this blog. Still, the focus is on delivering something to people in developing countries (whichever term you prefer to use for this), for their benefit. Precisely how we get from delivery to benefit is not always clear.

On top of this, the Global South is often treated as a laboratory for unproven new technologies (Fejerskov, 2017). Historically, “established technologies were transferred from rich to poor countries and perceived as the key element in catalysing economic and social progress” (Ibid., p. 948). Fejerskov argues that this results in “societal progress [being] pursued through precarious technological experimentation and intervention, the social and political consequences of which are rarely understood” (p. 948).

Experiments with cryptocurrency in the Global South are a great example of this. The link between cryptocurrencies and some tangible improvement in people’s lives, or a reduction in inequality, is tenuous at best (Henshaw, 2022). I explore this topic in more detail in my previous blog post.

2. Does it introduce bias?

Tools like machine learning and artificial intelligence (AI) conjure up images of sci-fi films and robots gaining sentience. But the reality is much more mundane than this. Machine learning “allows computers to generalise from existing data and make predictions for new data” (Paul et al., 2018, p. 12). AI goes “beyond learning and making predictions to create, plan or do something in the real world” (Ibid., p. 12).

There are many potential use cases for machine learning and AI. For instance, they can make sense of large and complex datasets to identify patterns: flagging warnings about incoming earthquakes, identifying incidences of deforestation, recognising and diagnosing crop disease, and even making predictions (Paul et al., 2018). The idea is that AI tools are politically neutral, can potentially be more objective, and can reduce human bias in decision-making.

These purported advantages make data-driven solutions a popular way to approach decision-making processes in a range of areas from insurance to health care to education (Birhane, 2019). This is because they appear to offer a way to address complex social problems without having to grapple with the systemic failures that cause them.

However, in reality, “algorithms are opinions embedded in code”, as Cathy O’Neil puts it in her book Weapons of Math Destruction (cited in Birhane, 2019). When looking at applications of machine learning and AI for development, we must consider who is doing the programming, and which groups the tool will affect. As Abeba Birhane (2019) writes, “AI systems reflect socially and culturally held stereotypes, not objective truths”. These systems are built entirely from past or existing data. Sometimes past trends can offer insights into future trends, but not always. After all, past performance is not an indicator of future success.
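To make this concrete, here is a deliberately simplified Python sketch that is not taken from any of the sources cited here. It assumes a hypothetical lender whose “model” simply learns the historical approval rate for each group of applicants (the groups and decisions below are invented toy data, not real results) and then approves new applicants whose group cleared a threshold in the past. Nothing in the code mentions fairness, yet whatever imbalance existed in past decisions quietly becomes the rule for future ones.

```python
from collections import defaultdict

# Hypothetical historical decisions: (applicant group, approved?).
# Invented toy data for illustration only.
history = [
    ("urban", True), ("urban", True), ("urban", True), ("urban", False),
    ("rural", True), ("rural", False), ("rural", False), ("rural", False),
]

def fit_approval_rates(records):
    """'Generalise' from existing data: learn the past approval rate per group."""
    counts = defaultdict(lambda: [0, 0])  # group -> [approvals, total applications]
    for group, approved in records:
        counts[group][0] += int(approved)
        counts[group][1] += 1
    return {group: approvals / total for group, (approvals, total) in counts.items()}

def predict(rates, group, threshold=0.5):
    """Approve a new applicant if their group's historical approval rate meets the threshold."""
    return rates.get(group, 0.0) >= threshold

rates = fit_approval_rates(history)
print(rates)                                          # {'urban': 0.75, 'rural': 0.25}
print(predict(rates, "urban"), predict(rates, "rural"))  # True False
```

Real machine learning models are far more complex than this, but the basic idea of generalising from existing data to new cases is similar, which is why it matters who collected that data and how.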

3. Does it reduce or remove people’s agency?

It has been widely established that technology is not neutral and can “hold different meanings and purposes for people across cultures” (Bentley et al., 2019, p. 478). The same technology may be used and experienced very differently from one setting to the next. It might not be sufficiently responsive to the context-specific problem it is supposed to address, and it might even create new, unintended problems that users are not equipped to navigate.

Take, for example, a study of mobile money users in Rwanda led by the Smart Campaign, a consumer protection initiative of the Center for Financial Inclusion (CFI) at Accion (Rizzi and Kumari, 2021). The study asked 30 Rwandan mobile money users what they considered to be fair when it comes to the use of their data to, among other things, make decisions about creditworthiness.

According to the study, “80% of respondents confirmed that they would trust a digital lender’s credit assessment over a human loan officer” (Rizzi and Kumari, 2021). This was because they perceived digital lenders to be less biased and less corrupt than human loan officers. However, when the researchers explained the types of data that digital lenders use to make credit assessments, users were shocked by the amount of their personal data that fed into these decisions. The researchers even needed to build extra time into the surveys to explain key concepts, such as the use of alternative data sources like utility payments and phone battery usage to determine creditworthiness (Vinyard et al., 2021)*.

Users also universally called for better communication from digital lenders. For example, they explained that they were rarely given reasons when a loan request was rejected. Users also noted that they had no means of recourse and no way to explain why they were unable to make a repayment.

If decision-making is removed from human hands, so is any flexibility to allow for individual circumstances.

4. Do the benefits accrue to people, or to businesses?

According to Birhane (2020), “data and AI seem to provide quick solutions to complex social problems”. Data-driven decision-making relies on scraping all kinds of data from users to understand their behaviour, and to make assumptions or even decisions on their behalf. The purpose of this is to save people and businesses time and make their lives easier. But as Birhane astutely points out, it also means that people are treated as data points. This data “uncontestedly belongs to tech companies and governments” (2020).

This becomes even more problematic when brought into the context of post-colonial societies: “this discourse of ‘mining’ people for data is reminiscent of the coloniser attitude that declares humans as raw material free for the taking” (Birhane, 2020).

Michael Kwet (2021) calls this “digital colonialism”, or “the use of digital technology for political, economic and social domination of another nation or territory”. The dominance of big tech – which owns not only the intellectual property but also the infrastructure that sustains computing power globally – “[entrenches] an unequal division of labour”. This dominance is consolidated mostly within the United States, though the data points – the users – are located globally.

Sometimes it is even more explicit than this. Kwet gives the example of Microsoft’s contracts with the South African government, under which many schools are provided with Microsoft software, ostensibly at low or no cost. But there is always a cost. The arrangement is very attractive for Microsoft for multiple reasons. For one thing, it conditions users to become long-term users of its products. Microsoft also collects longitudinal (meta)data on users of its education software, which it shares with the government.

While this might seem benign and even helpful in the short term, it raises questions of consent as well as concerns about user privacy, particularly for children and their teachers. It could also facilitate (covert) government surveillance, especially in countries without strong data protection frameworks. This line of questioning opens a can of worms about data rights, ownership and even democracy. In the case of big tech, if an offer seems too good to be true, it probably is.

Reflecting on the blogging exercise

The past several weeks have offered an insightful opportunity to dig deeper into the literature and real-world examples of ICT4D, and the critical discourse surrounding them. I have to admit, though, that I am leaving this exercise even more cynical than I was when I began.

Concepts such as machine learning, AI and digital financial inclusion are not new to me. In the context of development research, technology can offer valuable automation or insights, for example when cleaning large datasets or deriving patterns from data in ways that would be too time-consuming or expensive for humans to do themselves. There is a lot of potential for technology to bring improved convenience across many domains. This is not the part that makes me cynical.

What concerns me, and should concern us all, is Cinnamon’s observation that we need to be “wary of technological determinism, the belief that the march of technological progress is inevitable and can be harnessed for economic and social development” (2020, p. 229). Just because a technology exists doesn’t mean it will help to reduce poverty or improve lives in any meaningful way. This is especially true when the technology was pioneered in the Global North and reverse-engineered for a new use case in the Global South, where it may not be fit for purpose or suited to the context.

Words like ‘disruptive’ and ‘revolutionary’ are thrown around a lot, especially in a Global North context where technological determinism seems entrenched. But unless the technology in question actually addresses systemic problems, this is just marketing. In addition, increased use of big data comes with an environmental cost that calls for caution (Corbett, 2018).

Another challenge is the sheer volume of content available on this topic, not just from academics but especially from NGOs, think tanks and private companies. I was co-responsible for monitoring The Data Kitchen’s Twitter account for interesting developments in the #data4dev space, which was a little overwhelming: there is simply too much going on to engage with each source in a meaningful way. My professional experience also supports this, as social media largely serves as a tool for promotion and branding rather than for engagement and learning. This experience reflects Denskus and Esser’s conclusion that “dynamics of how international development is conceived and defined do not seem to be affected by social media to any significant degree” (2013, p. 418).

At the same time, data and technology are not going anywhere. Maintaining a critical eye when it comes to the promise of new technologies or data-driven solutions will only become more important, both within and outside the development space.

*Note that the author is employed as a Business Development and Communications Associate at Laterite, the company responsible for leading the data collection for the Smart Campaign project in Rwanda referenced above. The cover image for this blog was generated using DALL·E, an OpenAI tool.

References

Bentley, C. M., Nemer, D., and Vannini, S. (2019). ‘“When words become unclear”: unmasking ICT through visual methodologies in participatory ICT4D’. AI & Society, 34, pp. 477-493. DOI: 10.1007/s00146-017-0762-z

Birhane, A. (2019). The algorithmic colonisation of Africa. Real Life Magazine. Available online: https://reallifemag.com/the-algorithmic-colonization-of-africa/

Cinnamon, J. (2020). ‘Data inequalities and why they matter for development’. Information Technology for Development, 26(2), pp. 214-233. DOI: 10.1080/02681102.2019.1650244

Corbett, C. J. (2018). ‘How sustainable is big data?’ Production and Operations Management, 27(9), pp. 1685-1695. DOI: 10.1111/poms.12837

Denskus, T. and Esser, D. E. (2013). ‘Social media and global development rituals: a content analysis of blogs and tweets on the 2010 MDG summit’. Third World Quarterly, 34(3), pp. 405-422. DOI: 10.1080/01436597.2013.784607

Fejerskov, A. M. (2017). ‘The new technopolitics of development and the Global South as a laboratory of technological experimentation.’ Science, Technology & Human Values, 42(5), pp. 947-968. DOI: 10.1177/0162243917709934

Heeks, R. (2017). Information and communication technology for development (ICT4D). Routledge, London.

Henshaw, A. (2022). ‘“Women, consider crypto”: Gender in the virtual economy of decentralised finance’. Politics & Gender, pp. 1-25. DOI: 10.1017/S1743923X22000253

Kwet, M. (2021). Digital colonialism: the evolution of US empire. TNI Longreads. Available online: https://longreads.tni.org/digital-colonialism-the-evolution-of-us-empire

Paul, A., Jolley, C., and Anthony, A. (2018). Reflecting the past, shaping the future: Making AI work for international development. USAID. Washington, USA.

Rizzi, A. and Kumari, T. (2021). Trust of Data Usage, Sources, and Decisioning: Perspectives From Rwandan Mobile Money Users. CFI at Accion. Available online: https://www.centerforfinancialinclusion.org/trust-of-data-usage-sources-and-decisioning-perspectives-from-rwandan-mobile-money-users

Sein, M. K., Thapa, D., Hatakka, M. and Saebo, O. (2019). ‘A holistic perspective on the theoretical foundations for ICT4D research’. Information Technology for Development, 25(1), pp. 7-25. DOI: 10.1080/02681102.2018.1503589

Vinyard, M., Shima, L. and Langbeen, L. (2021). Studying financial inclusion: Asking questions about algorithms. Laterite. Available online: https://www.laterite.com/blog/financial-inclusion-phone-survey-how-to-ask-questions-about-algorithms/

Walsham, G. (2017). ‘ICT4D research: reflections on history and future agenda’. Information Technology for Development, 23(1), pp. 18-41. DOI: 10.1080/02681102.2016.1246406