This series of articles examines the consequences that limited access to information and the ever-growing spread of misinformation have for the most vulnerable groups in society.

In the previous blog posts I discussed cases where access to information was hindered in one way or another: in some cases the necessary technology is not available, and in others the information is not provided in the required language. In this post I will address another aspect of the problem: access to information is provided, but the information is not correct. A myriad of technological developments seek to help vulnerable people in crisis situations, yet in some cases the information they provide is either incorrect or misleading. This was the case with a mobile phone application called I Sea, specifically designed to help refugees in distress while crossing the Mediterranean Sea.

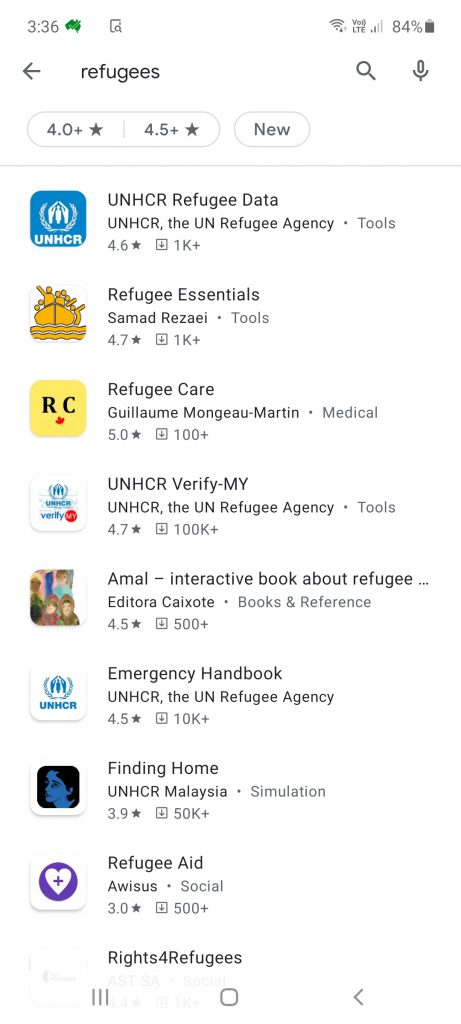

App stores are full of mobile phone applications for anything and everything you can think of, and more are developed on a daily basis. Sure, many of the applications are for pure entertainment or practical life hacks, but there is a growing interest in tech-for-good projects. Simply typing the word “refugees” into the search bar on Samsung Play Store gives me a list of applications ranging from money transfer to UNHCR apps, and from Refugee Essentials to apps about refugees and business opportunities. More and more money is being spent on developing applications that would help make the world a better place. Could this be only positive? Technology can certainly be used to benefit vulnerable groups, but refugees live in inherently precarious circumstances, and the lack of ethical data regulations makes the conversation more complex.

A couple of years ago, a Singapore-based ad agency called Grey Group developed a mobile phone application called I Sea. The application was meant to help refugees who were in trouble crossing the Mediterranean Sea, and it even won a Bronze medal at the Cannes Lions conference, though the prize was later returned. The application claimed to feed its users live images from the Mediterranean, allowing them to spot refugees in distress. With the help of live satellite images, the user was supposed to identify boats in trouble and flag their location to the Malta-based Migrant Offshore Aid Station (MOAS), which would then reach out to help the refugees.

The purpose of the application surely sounds altruistic; however, it was revealed that it did none of the above. The so-called real-time satellite footage was actually static, unchanging imagery, and the weather forecast was hundreds of kilometres off. It was practically impossible to use any of the data retrieved from the application to help a refugee in distress. The makers of the I Sea app seem to have spent more time coming up with a catchy name than on saving people’s lives.

The application cleverly aimed to use the power of crowdsourcing to solve a huge humanitarian problem; however, it is very problematic that it actually provided its audience with false information. When done ethically, crowdsourcing data makes it possible to shift the ownership of knowledge from one entity, e.g. a private company, to a larger audience. Seen through a wider lens, this could mean that humanitarian action no longer belongs in the box of Western ‘do-gooding’ but instead becomes more of a participatory process with a number of owners and directions¹. The I Sea app, however, misled its users by presenting static satellite images as live footage. This leaves one wondering how it is possible that the application was awarded a prize for something it did not even do. Furthermore, if an organisation is not able to check such facts before handing out an award, how would an individual be expected to do so?

With such an abundance of apps, there are two things to consider. Firstly, who is designing, and for whom? Is it a private entity or a governmental actor, and is the target group or a wider audience participating in the planning process? Secondly, how can we reach greater transparency to avoid such misuse of data? Currently, the humanitarian sector lacks comprehensive, common frameworks to safeguard privacy, security and ethics in regard to technology and the use of data. If nothing else, there should be a way to conduct due diligence on applications that aim to have an impact on people’s lives.

“Ethical use of technology involves balancing technology’s risks and benefits and managing security and privacy considerations.”

In the next and final post of this series I will continue discussing the development of technological solutions and the lack of privacy regulations around them. Stay tuned!

References

¹ Read, R., Taithe, B. & Mac Ginty, R. (2016). Data hubris? Humanitarian information systems and the mirage of technology. Third World Quarterly, 37(8), 1314-1331.