There is a popular saying, one that I imagine exists in almost every part of the world: ‘I only believe what I see with my own eyes and hear with my own ears.’ The logic behind it is easy to understand (after all, what could be more trustworthy than our own senses?), but does it still hold in the Web 2.0 digital world? I would argue that it does not, and here is a compelling reason why:

“Deepfake”, a novel digital technology.

I first encountered this term in December 2019, when Reuters News Agency, the company I worked for, introduced a course called “Identifying and Tackling Manipulated Media”. Indeed, both the phenomenon and the term itself are fairly new, given that the term was only coined in 2017. But I believe it can be as dangerous as it is new.

As a news reporter, I am drawn to this topic because deepfakes have the potential to feed fake news. Journalism is already under scrutiny for its credibility because of factors such as disinformation and manipulation; thus, it is vital, especially for a journalist, to know the threat and learn how to tackle it (Unesco, n.d.). But this obviously is not, and should not be, restricted to media people. It is something the broader public should be aware of, so as not to be misled by fake content aimed at stirring things up, which can end up destabilizing societies and democracy.

Without further ado, it is high time for me to explain the deepfake technology that, as I said, could turn our eyes and ears into unreliable witnesses:

The term deepfake is a combination of the words “deep learning” and “fake”.

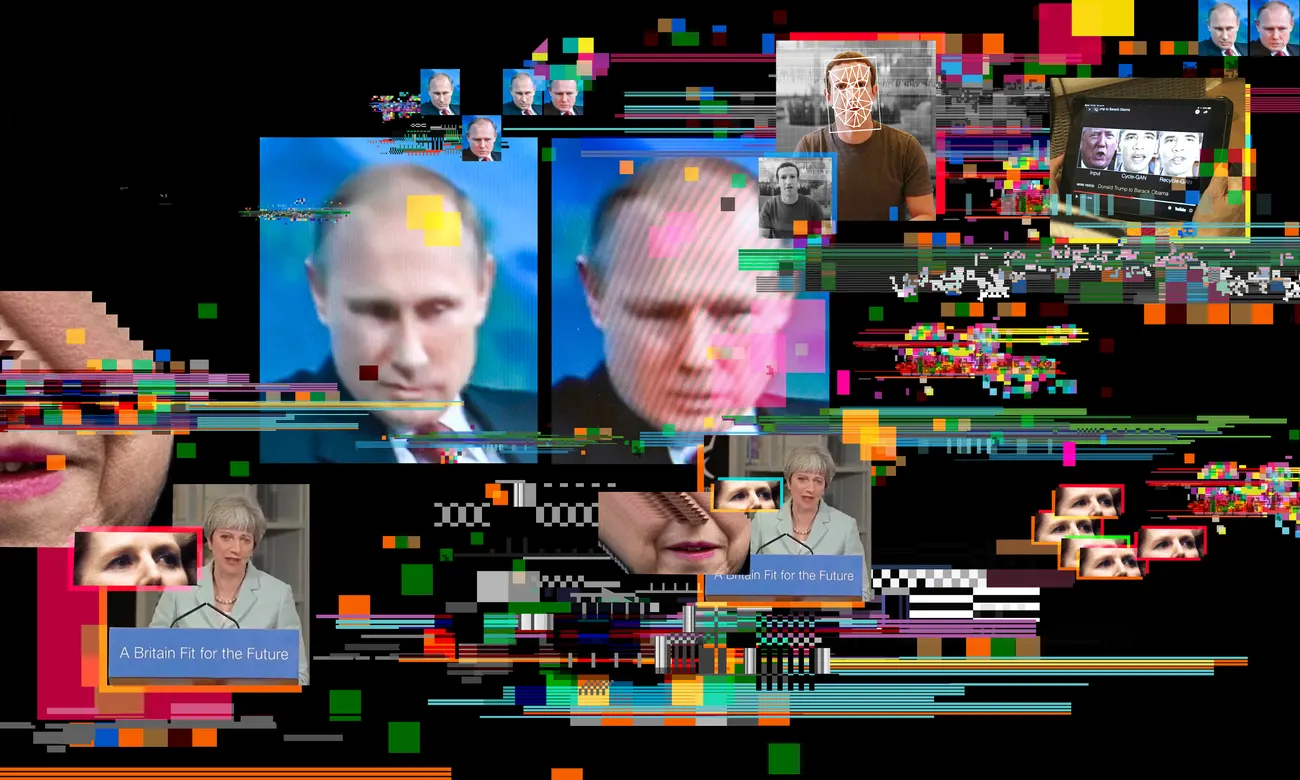

Deepfakes are “hyper-realistic videos that apply artificial intelligence (AI) to depict someone say and do things that never happened” (Westerlund, 2019).

Deepfakes use facial mapping technology and AI to swap the face of a person in a video with the face of somebody else. What makes them hard to identify is that they are built from real footage and can carry authentic-sounding audio.

For creative and commercial purposes, this technology can be beneficial in advertising and film-making (Reuters, n.d.). However, in the hands of ill-intentioned actors, deepfakes can undermine the credibility of the media, disseminate misinformation and create an environment of distrust. They can be even more dangerous in developing countries that fall behind on digital literacy.

When deepfakes became popular two years ago, especially with celebrities’ faces being put into porn videos, their scope was limited to the specific people they targeted (Thomas, 2020). But with the technology improving at a very fast pace, deepfakes are becoming more and more common and are now targeting politicians, corporations and authorities. On top of that, deepfake creation tools are freely available online, which makes it possible for even a teenager to create and distribute a deepfake video using a smartphone (Huston & Bahm, 2020).

It sounds very dystopian, but with convincing deepfake videos made with some expertise, it could be possible to manipulate markets, fabricate evidence and sway public opinion.

Let’s think about elections! The US presidential election is just around the corner, and fake news is already pouring in, mostly via social media. Now imagine people also being exposed to rather realistic deepfake videos of politicians, with no way to tell with the naked eye what is fake and what is not. What is more, where trust is lacking, it would be easy to discredit genuine footage by dismissing it as a deepfake. How could anyone prove otherwise?

As apocalyptic as it feels, the situation is still not hopeless.

There are several ways to tackle the problem. First of all, regulation and legislation can be introduced. Digital literacy and critical thinking can be added to school curricula to raise awareness from an early age. And with the help of evolving technology, algorithms can be improved to build better deepfake detection tools.

Combined, all these possible solutions could help overcome the ‘side effects’ of deepfakes over time. But it is important to identify the problem first in order to act on it. In the meantime, then, the best response we can have as individuals is to know the threat, not to blindly believe everything we see, and to critically judge the daily input we receive from traditional media, social media or indeed any other source.

Have you ever come across deepfake videos? What was your initial response? Please share your thoughts in the comments!