Part 02 of the learning curve about Big Data for dummies (like myself)

How can algorithms, big data and AI cause biases and unfairness?

There are five common sources: data that reflects existing biases, unbalanced classes in training data, data that doesn’t capture the right value, data that is amplified by feedback loops, and malicious data. Let me try to explore what that means.
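To make "unbalanced classes in training data" a bit more concrete, here is a minimal sketch in Python. The labels and the 95/5 split are made up for illustration, and the "model" is just a baseline that always predicts the most common label. It is not a real classifier, only a way to see why a high accuracy number can hide the fact that the smaller group is ignored completely.

```python
from collections import Counter


def majority_baseline(labels):
    """Return the most common label, i.e. a 'model' that always predicts it."""
    return Counter(labels).most_common(1)[0][0]


def accuracy(predictions, labels):
    """Share of all examples that were predicted correctly."""
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


def recall(predictions, labels, positive):
    """Share of examples with the given label that were predicted correctly."""
    relevant = [(p, y) for p, y in zip(predictions, labels) if y == positive]
    if not relevant:
        return 0.0
    return sum(p == y for p, y in relevant) / len(relevant)


# Hypothetical, made-up training labels: 95 examples of one class, 5 of the other.
labels = ["majority"] * 95 + ["minority"] * 5

predicted_label = majority_baseline(labels)
predictions = [predicted_label] * len(labels)

overall_accuracy = accuracy(predictions, labels)
minority_recall = recall(predictions, labels, "minority")

print(f"accuracy: {overall_accuracy:.2f}")        # looks good on paper
print(f"minority recall: {minority_recall:.2f}")  # the minority is never recognised
```

With this made-up data the baseline is right 95% of the time and still never recognises a single minority example, which is the kind of blind spot an unbalanced data set can produce.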

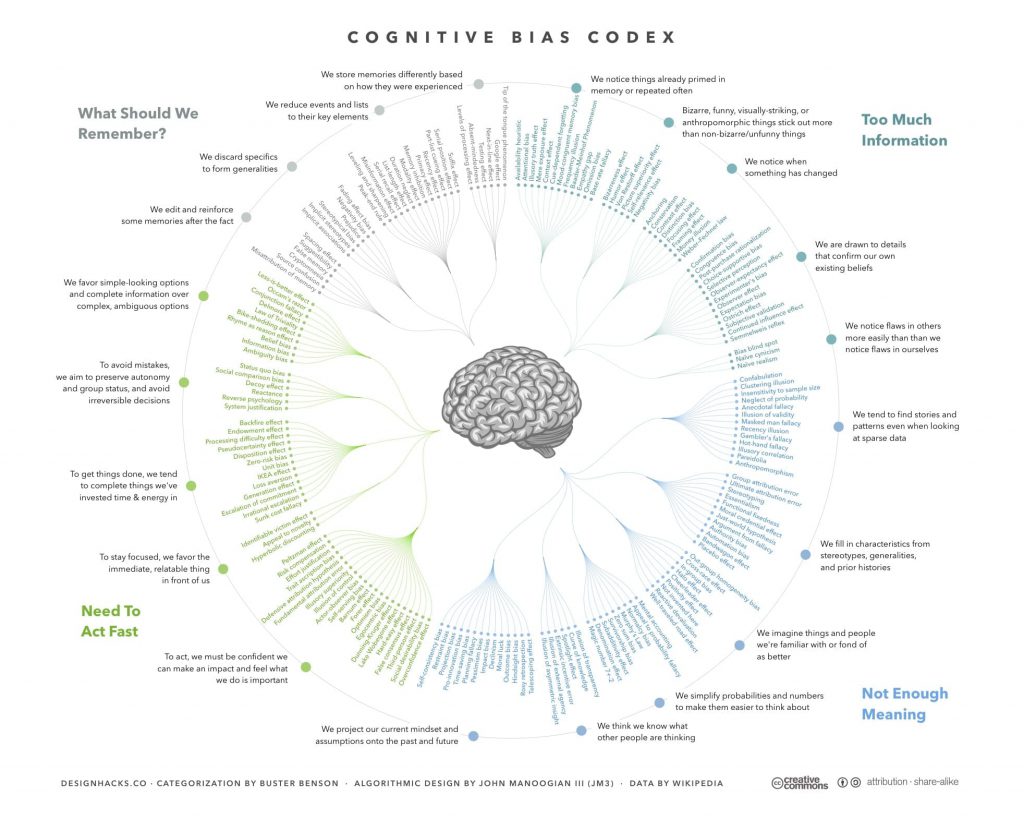

Now, bias itself isn’t necessarily a terrible thing. Our brains often use it to take shortcuts by finding patterns. But bias can become a problem if we don’t acknowledge exceptions to patterns or if we allow it to discriminate. The infographic below shows that we are all biased in one way or another, so using data shaped by existing biases could spread those biases even further. These biases affect belief formation, reasoning processes, business and economic decisions, and human behavior in general.

We humans take shortcuts when remembering and processing data and when constructing meaning.

Four limitations constrain every human’s intelligence:

- Too much information to process for our brain

- Generating meaning and connecting the dots is difficult

- Not enough time in the moment/day/lifetime to thoroughly consider and analyze all possibilities

- Not enough space in our brains, so we try to generalize, or identify patterns, to save some space

Here is an example from Google’s algorithms:

A Google image search for captain and programmer (left and right) shows predominantly white males, while the search for nurse shows predominantly white females.

Credit: Screenshots of Google images taken on 27.09.2021 by the author

So, if I am a male person of colour and would like to become a nurse, my search with Google Images might create the impression that this job is not available to me. Likewise, for a woman who wants to steer aeroplanes as a captain or get into the IT business as a programmer, these careers might seem out of reach.

A search engine is an algorithm that takes a search query as input, searches its database for items relevant to the words in the query, and outputs the results. Because those results mirror whatever is most common in the underlying data, algorithms might discriminate against people who don’t match the average characteristics. Even when protected classes are not used directly, they may emerge through correlated features, which are features that are unintentionally correlated with a specific prediction.
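The description of a search engine above can be sketched as a toy example in Python. To be clear, this is not how Google works. The index, the captions, the `depicts` annotation and the function names `search` and `group_shares` are all hypothetical and only there to show the principle: the algorithm simply matches query words, and whatever skew is in the indexed data shows up in the results.

```python
from collections import Counter

# Hypothetical, hand-made image index. Each entry has a caption and an
# annotation of who is shown. The skew is put in on purpose.
IMAGE_INDEX = [
    {"caption": "nurse at hospital ward", "depicts": "woman"},
    {"caption": "nurse with patient", "depicts": "woman"},
    {"caption": "smiling nurse portrait", "depicts": "woman"},
    {"caption": "nurse checking chart", "depicts": "man"},
    {"caption": "programmer at desk", "depicts": "man"},
    {"caption": "programmer writing code", "depicts": "man"},
    {"caption": "programmer in office", "depicts": "man"},
    {"caption": "programmer with laptop", "depicts": "woman"},
]


def search(query, index):
    """Return entries whose caption shares a word with the query, best match first."""
    query_words = set(query.lower().split())
    scored = []
    for entry in index:
        overlap = len(query_words & set(entry["caption"].lower().split()))
        if overlap:
            scored.append((overlap, entry))
    # Sort by the number of shared words only, highest first.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [entry for _, entry in scored]


def group_shares(results):
    """Return the share of each annotated group among the results."""
    if not results:
        return {}
    counts = Counter(entry["depicts"] for entry in results)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}


nurse_shares = group_shares(search("nurse", IMAGE_INDEX))
programmer_shares = group_shares(search("programmer", IMAGE_INDEX))
print("nurse:", nurse_shares)
print("programmer:", programmer_shares)
```

Nothing in `search` mentions gender at all, yet the results for each job title are lopsided because the made-up index is lopsided.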

AI bias is the underlying prejudice in the data used to create AI algorithms, which can ultimately result in discrimination and other social consequences. Because discrimination undermines equal opportunity and amplifies oppression, this creates data injustices. With the exponential rise in data-driven technology, data injustice has become a global phenomenon (Linnet Taylor, 2017). I therefore want to explore what effect data injustices can have on development. I will explore data injustices in my soon-to-come post, Part 04 of the learning curve about Big Data for dummies (like myself).

References

Linnet Taylor, 2017: What is data justice? The case for connecting digital rights and freedoms globally. Sage Publishing.

Cognitive biases: https://medium.com/thinking-is-hard/4-conundrums-of-intelligence-2ab78d90740f

https://medium.com/message/harassed-by-algorithms-f2b8229488df

https://medium.com/message/how-facebook-s-algorithm-suppresses-content-diversity-modestly-how-the-newsfeed-rules-the-clicks-b5f8a4bb7bab